Enhanced Reality: Combining AR and AI to Improve Everyday Life

Concepts for AI-powered glasses that understand what you're doing and help in the moment. Gamified chores, hands-free cooking, contextual task tracking. Presented alongside Snap at AWE 2025.

How might we make boring tasks feel more like a game?

How might we give people a context-aware AI companion that helps in the moment?

How might we surface the right tool at the right time without requiring user input?

How might we label and understand anything in a scene without custom-trained models?

The Tech Behind It

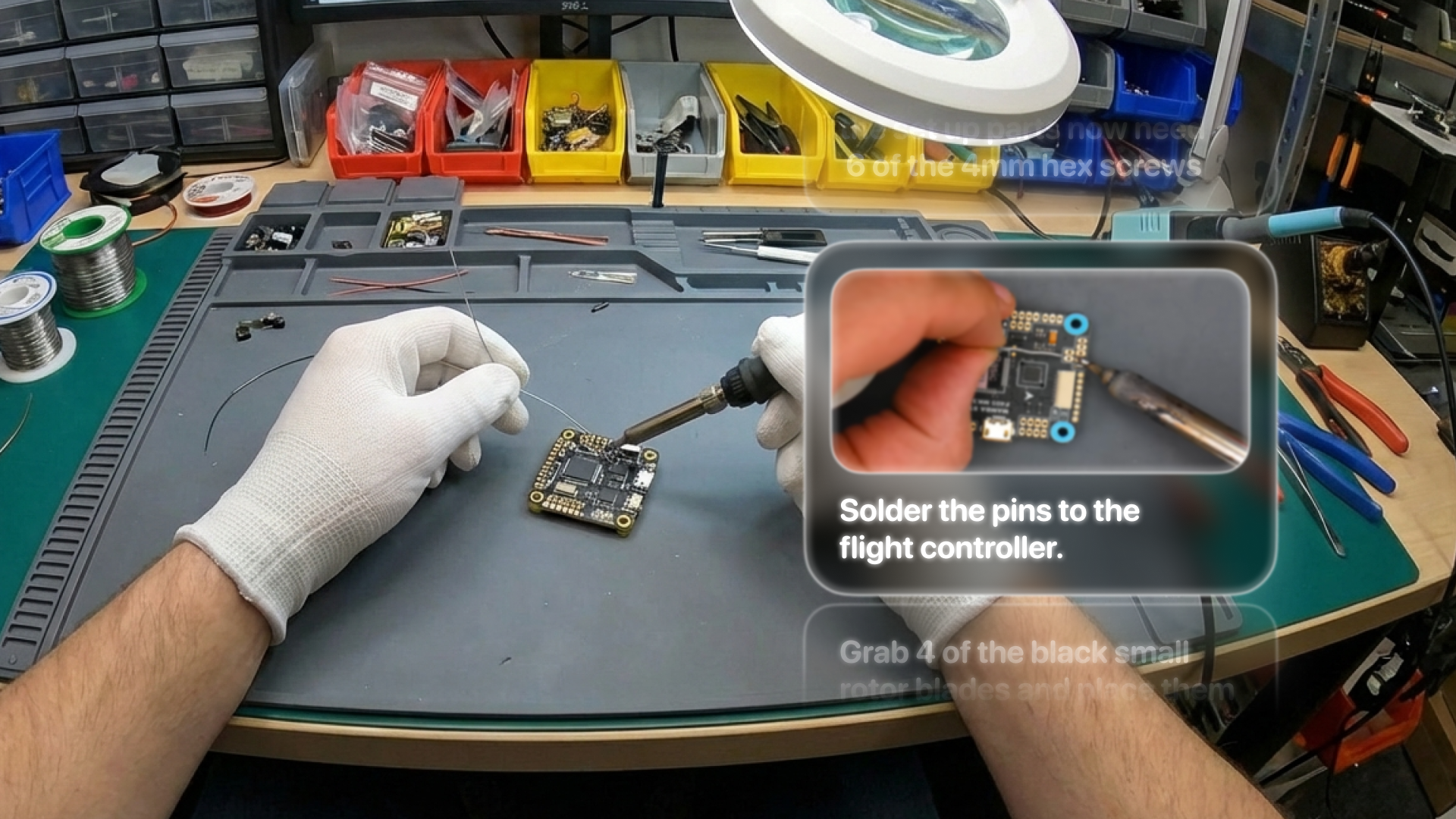

I started building with vision-language models early, before most people had access to multimodal APIs. The key idea: instead of relying on pre-programmed triggers, the interface sees what's happening and responds. It can recognize objects, track task progress, and surface relevant information without you asking. The Generative Spatial Anchors prototype demonstrates this: it generates labels and coordinates on the fly and anchors them to real objects, with no custom training required.
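As a rough illustration of how generated labels and coordinates could become spatial anchors, here is a minimal sketch under several assumptions: the vision-language model is prompted to reply with JSON such as `[{"label": "mug", "x": 0.5, "y": 0.5}]`, where `x` and `y` are normalized to the camera frame, and a depth value plus pinhole camera intrinsics are available for that frame. The reply format and the names `parse_labels`, `unproject`, and `make_anchors` are hypothetical; this is not the prototype's actual code or any headset SDK's API. Each labeled point is unprojected with the standard pinhole model into a 3D camera-space position that an AR runtime could attach an anchor to.

```python
import json
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Anchor:
    label: str
    # Camera-space position in metres: x right, y down, z forward (pinhole convention).
    position: Tuple[float, float, float]


def parse_labels(vlm_text: str) -> List[dict]:
    """Parse a hypothetical VLM reply and keep only well-formed items
    with a string label and normalized coordinates in [0, 1]."""
    try:
        items = json.loads(vlm_text)
    except json.JSONDecodeError:
        return []  # Models sometimes return non-JSON text; treat it as "no labels".
    if not isinstance(items, list):
        return []
    valid = []
    for it in items:
        if not isinstance(it, dict):
            continue
        x, y = it.get("x"), it.get("y")
        if (isinstance(it.get("label"), str)
                and isinstance(x, (int, float)) and isinstance(y, (int, float))
                and 0.0 <= x <= 1.0 and 0.0 <= y <= 1.0):
            valid.append(it)
    return valid


def unproject(u: float, v: float, depth_m: float,
              fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float, float]:
    """Pinhole unprojection of pixel (u, v) at a known depth into camera space."""
    return ((u - cx) * depth_m / fx, (v - cy) * depth_m / fy, depth_m)


def make_anchors(vlm_text: str,
                 depth_at: Callable[[float, float], Optional[float]],
                 fx: float, fy: float, cx: float, cy: float,
                 width: int, height: int) -> List[Anchor]:
    """Turn labeled, normalized image points into camera-space anchors.
    `depth_at(u, v)` is an assumed depth lookup returning metres, or None if unknown."""
    anchors = []
    for item in parse_labels(vlm_text):
        u, v = item["x"] * width, item["y"] * height  # Normalized -> pixel coordinates.
        z = depth_at(u, v)
        if z is None or z <= 0:
            continue  # Skip points without usable depth.
        anchors.append(Anchor(item["label"], unproject(u, v, z, fx, fy, cx, cy)))
    return anchors


if __name__ == "__main__":
    reply = '[{"label": "mug", "x": 0.5, "y": 0.5}, {"label": "sponge", "x": 0.75, "y": 0.5}]'
    # Example intrinsics for a 640x480 frame and a flat 1 m depth, purely illustrative.
    for a in make_anchors(reply, lambda u, v: 1.0, 500, 500, 320, 240, 640, 480):
        print(a)
```

Placing these anchors in the world, rather than relative to the camera, would additionally require the headset's camera pose for the same frame; that step is left out to keep the sketch focused on turning model output into 3D points.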

Impact

These ideas directly informed Chores.gg, which won $50K from Meta for "Best Implementation of Passthrough Camera Access with AI." This work also led to a presentation alongside Snap at AWE 2025, an event with over 5,000 participants.